...making Linux just a little more fun!

Mailbag

This month's answers created by:

[ Amit Kumar Saha, Ben Okopnik, Neil Youngman, Rick Moen, Thomas Adam ]

...and you, our readers!

Gazette Matters

lists.linuxgazette.net mailing list memberships reminder

Rick Moen [rick at linuxmafia.com]

Mon, 1 Dec 2008 10:00:59 -0800

An lg-announce subscriber was evidently surprised by the monthly

reminder e-mail that starts out:

This is a reminder, sent out once a month, about your

lists.linuxgazette.net mailing list memberships. It includes your

subscription info and how to use it to change it or unsubscribe from a

list.

----- Forwarded message from Rick Moen <rick@linuxmafia.com> -----

Date: Mon, 1 Dec 2008 09:58:06 -0800

From: Rick Moen <rick@linuxmafia.com>

To: [subscriber address]

Cc: mailman-owner@lists.linuxgazette.net

Subject: Re: Fwd: lists.linuxgazette.net mailing list memberships reminder

Quoting [subscriber]:

> I don't think its a very good idea to send out user passwords in emails.

[subscriber] --

The Mailman Web pages did advise you at the time you signed up that

you should use a low-security password. That's why. Actually, here's

the exact, literal quotation:

"You may enter a privacy password below. This provides only mild

security, but should prevent others from messing with your

subscription. Do not use a valuable password, as it will

occasionally be emailed back to you in cleartext."

In any event, if you do not want your password periodically sent in a

reminder mail, all you have to do is disable that option for your

subscription. Log in at

http://lists.linuxgazette.net/mailman/listinfo/lg-announce (using, yes,

your subscription password), and you can toggle off that setting among

others.

----- End forwarded message -----

Our Mailbag

Corrupt dpkg?

Amit k. Saha [amitsaha.in at gmail.com]

Thu, 4 Dec 2008 13:56:58 +0530

Hi all,

Greetings!

I am using Ubuntu 8.10. I am facing strange issues on my installation.

I cannot install any package from the repo:

sudo apt-get install tar

[sudo] password for amit:

Reading package lists... Done

Building dependency tree

Reading state information... Done

Suggested packages:

ncompress

The following NEW packages will be installed:

tar

0 upgraded, 1 newly installed, 0 to remove and 98 not upgraded.

Need to get 0B/338kB of archives.

After this operation, 2298kB of additional disk space will be used.

dpkg-deb (subprocess): failed to exec tar: No such file or directory

dpkg-deb: subprocess tar returned error exit status 2

dpkg: error processing /var/cache/apt/archives/tar_1.20-1_i386.deb (--unpack):

subprocess dpkg-deb --control returned error exit status 2

Errors were encountered while processing:

/var/cache/apt/archives/tar_1.20-1_i386.deb

E: Sub-process /usr/bin/dpkg returned an error code (1)

This is what happens.

What probably caused this is that I removed 'nautilus' and

put on the 'Dolphin' file manager. I am not sure.

Any thoughts on how I can fix this?

Much appreciated.

Thanks,

Amit

--

Amit Kumar Saha

http://amitksaha.blogspot.com

http://amitsaha.in.googlepages.com/

Skype: amitkumarsaha

[ Thread continues here (4 messages/3.89kB) ]
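The dpkg-deb message above ("failed to exec tar: No such file or directory") suggests that the tar binary itself could not be found. As a first diagnostic, a sketch along these lines checks whether the relevant commands are on the PATH. The helper name have_cmd is invented for this illustration; the snippet only reports what it finds and does not attempt any repair.

```shell
#!/bin/bash
# Hypothetical helper (not part of dpkg or apt): succeed if the shell
# can locate the named command.
have_cmd() {
    command -v "$1" >/dev/null 2>&1
}

# The log above shows dpkg-deb trying to run tar, so check for both.
for tool in tar dpkg-deb; do
    if have_cmd "$tool"; then
        echo "$tool: found at $(command -v "$tool")"
    else
        echo "$tool: NOT found on PATH"
    fi
done
```

If tar turns out to be missing, that would be consistent with the failure in the log, although it would not by itself explain how the binary disappeared.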

Teacher Throws Fit Over Student's Linux CD

Ben Okopnik [ben at linuxgazette.net]

Mon, 22 Dec 2008 10:31:42 -0500

[from austinist, via RISKS Digest]

http://austinist.com/2008/12/10/aisd_teacher_throws_fit_over_studen.php

Detention for the student and legal threats from a clueless teacher.

Hilarious.

--

* Ben Okopnik * Editor-in-Chief, Linux Gazette * http://LinuxGazette.NET *

[ Thread continues here (10 messages/14.50kB) ]

Tesseract at Distributed Proofreaders

Jimmy O'Regan [joregan at gmail.com]

Mon, 22 Dec 2008 14:30:29 +0000

"Lectures on Russian Literature: Pushkin, Gogol, Turgenef, Tolstoy" by

Ivan Panin is being proofread at Distributed Proofreaders, using OCR

provided by Tesseract:

http://www.pgdp.net/c/project.php?id=projectID49430d0ed814f (for

anyone already registered).

The project comments aren't very promising though: "This is a

tesseract test project. I know the OCR is rather gruesome - please

rather work on something else than shoot me." and "This project might

go through P1 twice."

(I haven't seen anything other than the usual set of OCR errors, though).

Thieves Winning Online War, Maybe Even in Your Computer (NY Times)

Ben Okopnik [ben at linuxgazette.net]

Mon, 22 Dec 2008 10:17:28 -0500

[Article in NYTimes by John Markoff, via the RISKS Digest]

Despite the efforts of the computer security industry and a half-decade

struggle by Microsoft to protect its Windows operating system, malicious

software is spreading faster than ever. The so-called malware

surreptitiously takes over a PC and then uses that computer to spread

more malware to other machines exponentially. Computer scientists and

security researchers acknowledge they cannot get ahead of the onslaught.

(Full story here:

http://www.nytimes.com/2008/12/06/technology/internet/06security.html)

Gosh, that last sentence has me all terrified. "Cannot get ahead of the

onslaught", even.

I remember the last time I had a virus. I downloaded it, compiled it (in

a chroot-jail sandbox, just in case), and played with it for a bit - it

created a neat little "/tmp/.bliss" log file for me to read. Then, I ran

it again with an option that told it to go away, and it was gone.

It didn't make me feel onslaughtered (I think that's the proper

verb... <barbie_mode>English is *hard*!</barbie_mode>) at all. I don't

know what those other people's problem is.

Seriously - it's a badly-written article, with lots of name dropping and

little actual research. The author doesn't even mention Linux (or BSD,

or any options beyond OS X.) The general point is that the Bad Guys are

making lots of money ripping off the poor little users... but this is

just the same old three-card monte multiplied by a few million naive

hayseeds just off the train. Yep, there are more con men and more

suckers, as well as more unsuspecting mules. [shrug] It's called a

"growing population" and "better communications". That, as always,

presumes either A) an increased ability to swim with the sharks or B)

accepting, however unwillingly, your role as an hors d'oeuvre. There

is no option C - and never has been.

I guess the NYT needs some low-calorie filler for their Technology

section once in a while...

--

* Ben Okopnik * Editor-in-Chief, Linux Gazette * http://LinuxGazette.NET *

[ Thread continues here (3 messages/4.94kB) ]

[SEMI-OT] This is to all the Gmail users out there...

clarjon1 [clarjon1 at gmail.com]

Fri, 12 Dec 2008 14:28:04 -0500

Hey, Gang, long time no word from me.

I was using StumbleUpon, when I came across this link:

http://www.webmonkey.com/blog/Why_You_Should_Turn_Gmail_s_SSL_Feature_On_Now

In short, it's a notice that:

a) Google has enabled you to use HTTPS only for GMail, to make it more

secure, and

b) There's a new tool to allow malicious users to gain access to your

GMail if you don't use HTTPS/SSL mode.

To enable, click the Options link at the top of the page, then scroll

down to the last option. For me, this was the HTTPS/SSL enabler.

I hope this helps someone!

Take care all, and safe browsing!

--

Jon

What not to do when admin of Unix/Linux box

Martin J Hooper [martinjh at blueyonder.co.uk]

Mon, 08 Dec 2008 09:24:50 +0000

I'm sure you guys have done at least one of the things on this list...

http://www.cavecanen.org/linux/humour/horrorstories.txt

[ Thread continues here (4 messages/3.39kB) ]

In RI federal court - Harvard vs. the RIAA

Ben Okopnik [ben at linuxgazette.net]

Tue, 16 Dec 2008 07:45:06 -0500

Interesting court case involving the RIAA.

[ ... ]

[ Thread continues here (13 messages/45.81kB) ]

Talkback: Discuss this article with The Answer Gang

Published in Issue 158 of Linux Gazette, January 2009

Talkback

Talkback:123/smith.html

[ In reference to "A Short Tutorial on XMLHttpRequest()" in LG#123 ]

Luis Navarro [navarro_luis_a at yahoo.com.ar]

Fri, 5 Dec 2008 07:56:46 -0800 (PST)

Excellent tutorial! Thanks very much! It is all we need to understand AJAX

in a few minutes.

Talkback:157/dokopnik.html

[ In reference to "Book Review: CUPS Administrative Guide" in LG#157 ]

Neil Youngman [ny at youngman.org.uk]

Fri, 5 Dec 2008 10:25:16 +0000

One for pedants' corner here.

I noticed the use of the verb "administrating" in the article, where to me the

natural verb is "administering" and I started to wonder where this variant

came from. I have seen this before in many technical articles and I would

like to know whether all USians use administrate instead of administer, or

whether it is specific to the administration of computers?

Neil

[ Thread continues here (8 messages/11.96kB) ]

Talkback:157/anonymous.html

[ In reference to "Keymap Blues in Ubuntu's Text Console" in LG#157 ]

Kapil Hari Paranjape [kapil at imsc.res.in]

Sat, 6 Dec 2008 09:07:31 +0530

Hello,

The article on Ubuntu and Keymap blues is (IMHO) too heavy

on opinion and too sparse in solutions. Here are some more detailed

comments:

1. The author drops openSUSE because of KDE 4.1 and feels that

openSUSE's support for Gnome is poor. So on to Ubuntu, only

to worry about the console keymap. This is surely odd as

one can use a terminal inside Gnome and configure its

keymap using X or gconf or whatever.

By the way the author talks about text-mode keymap when what is

meant is the vt-console keymap.

2. The author could have consolidated the complaints about

console-tools in one place and then gone on to explain how kbd is

better and can be configured. Instead we have few hints on how to

(for example) configure kbd to use the Dvorak keyboard (a problem

stated at the beginning) and a lot of complaints about differences

between console-tools and kbd keymappings for function keys.

3. A sample keymap which is "sized down" and does fit the author's

needs would have been a good attachment to the article.

4. The author ends with "However, to make them consistent, you need

consensus." I would add that "To obtain consensus, you need a good

consistent solution to all the woes of the Linux console." Is the

author willing to propose such a solution?

A bit annoyed-ly,

Kapil.

--

[ Thread continues here (3 messages/3.42kB) ]

2-Cent Tips

Two-cent Tip: executing two different consecutive commands with the same set of parameters

Mulyadi Santosa [mulyadi.santosa at gmail.com]

Sat, 6 Dec 2008 10:34:42 +0700

Bash has a feature called "word designators". I find it useful if you

want to execute a command following the previous one with the same set of

parameters.

For example, instead of:

# ls -l /boot/vmlinuz-2.6.22-14-generic

# stat /boot/vmlinuz-2.6.22-14-generic

I could simply type:

# ls -l /boot/vmlinuz-2.6.22-14-generic

# stat !$

It would recall the last parameter of "ls".

Another variation. Let's say first you want to do:

# cat text-1.txt text-2.txt

but then you realize the texts are too long, so you switch to "tail":

# tail text-1.txt text-2.txt

OR

# tail !*

It would recall every word of the previous command except the zeroth

one (the command name itself).

regards,

Mulyadi.
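For readers who want to try these designators outside an interactive prompt, here is a small sketch. History expansion is normally switched off in non-interactive shells, so the demo feeds a few lines to a child bash that turns it on with "set -o history -o histexpand". The function name history_demo is invented for this example, and the behaviour is assumed to match a reasonably recent bash.

```shell
#!/bin/bash
# Hypothetical demo: run a child bash with history expansion enabled.
# HISTFILE is unset first so the demo does not touch any history file.
# The here-document is quoted, so the outer shell leaves "!" alone.
history_demo() {
    bash <<'EOF'
unset HISTFILE
set -o history -o histexpand
echo alpha beta gamma
echo last !$
echo alpha beta gamma
echo rest !*
EOF
}

history_demo
```

Bash echoes each expanded command line to standard error before running it, just as it does at an interactive prompt. The "last" line should come out with only the final word of the previous command substituted, and the "rest" line with all of its arguments.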

2-cent Tip: Temporary kill files

Ben Okopnik [ben at linuxgazette.net]

Tue, 30 Dec 2008 20:31:41 -0500

Occasionally, there are times that I don't feel like seeing someone's

email in my inbox for a while - for whatever reason. At those times,

"procmail" is very useful.

First, you need to calculate the "timeout" interval in seconds and add

it to the current date and time. Fortunately, the 'date' command makes

this easy:

# There are 86400 seconds in a day

ben@Tyr:~$ days=3; echo $(($(date +%s) + 86400 * $days))

1230944808

You can now use that value to determine whether that interval has

elapsed - i.e., you can create a filter within your "~/.procmailrc" file

based on that test:

:0:

##### Specify the 'From' or 'From:' address

* ^From.*joe@rant\.org

##### You can also narrow it down by subject if desired

* ^Subject.*Cut\ the\ crap

* ? test $((1230944808 - $(date +%s))) -gt 0

##### If all of the above criteria match and the difference between the

##### current date and the end date is greater than 0, discard the email

/dev/null

May everyone's holidays be merry, bright, and peaceful.

--

* Ben Okopnik * Editor-in-Chief, Linux Gazette * http://LinuxGazette.NET *
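The arithmetic in this tip can be wrapped in two small helper functions. This is only a sketch, and the names expiry_epoch and still_muted are invented here. The first prints the epoch time a given number of days from now. The second succeeds while that time is still in the future, mirroring the "test ... -gt 0" condition in the recipe.

```shell
#!/bin/bash
# Print the epoch time that lies $1 days in the future (86400 s per day).
expiry_epoch() {
    local days=$1
    echo $(( $(date +%s) + 86400 * days ))
}

# Succeed (exit status 0) while the given expiry time has not yet passed.
still_muted() {
    local expiry=$1
    [ $(( expiry - $(date +%s) )) -gt 0 ]
}

end=$(expiry_epoch 3)
echo "Mute until epoch $end"
if still_muted "$end"; then
    echo "still within the timeout"
fi
```

The value printed by expiry_epoch is what would be pasted into the procmail condition in place of the literal timestamp.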

2-cent Tip: audio ping

Ben Okopnik [ben at linuxgazette.net]

Sun, 30 Nov 2008 14:40:40 -0500

If you occasionally can't ping a host on your network, and suspect that

a wonky connection is to blame, troubleshooting can be a pain: watching

the output of "ping hostname" while crawling around in the dust under a

desk is, to put it mildly, a challenge. Enter the "-a" option to 'ping',

added by one of the people on the "iputils" team, Patrik Schilt: when

there's no connection, "ping -a hostname" simply sits and waits, but as

soon as one appears, it starts emitting audio beeps. That's a wonderful

"wishlist" feature, and should significantly improve the life of any

sysadmin who has ever groveled amongst the dropped corn chips and the

roach poop.

--

* Ben Okopnik * Editor-in-Chief, Linux Gazette * http://LinuxGazette.NET *

[ Thread continues here (25 messages/35.92kB) ]

2-cent Tip: 'hostwatch'

Ben Okopnik [ben at linuxgazette.net]

Sun, 30 Nov 2008 16:26:57 -0500

While I was writing my last tip (about audible ping), my wife said "you

know, what would be really useful is something that would start

pinging when the connection went down!" An easy sentiment to understand,

since we connect our laptops via a pair of cables with a cranky old

connector (and my laptop's Ethernet port's retainer is broken, meaning

that cables won't stay in it for long.) So, I cranked out this useful

little script, "hostwatch". It takes a hostname or IP as an argument,

tells you that it's watching the specified host, starts audibly

pinging when a connection goes down, and stops pinging (resets itself

back to watch status) when the connection comes back up. All in all, a

very useful gadget for the Okopnik family network.

#!/bin/bash
# Created by Ben Okopnik on Sun Nov 30 15:41:06 EST 2008

[ -z "$1" ] && { printf "Usage: ${0##*/} <hostname> \n"; exit; }

echo "Watching host '$1' - press 'Ctrl-C' to interrupt..."

while :
do
    while fping -c 1 "$1">/dev/null 2>&1
    do
        sleep 1
    done

    until fping -c 1 "$1">/dev/null 2>&1
    do
        echo -e "\007Connection to '$1' is DOWN."
        sleep 1
    done

    echo "Connection to '$1' is UP again."
done

Note that this script requires "fping" rather than just "ping".

--

* Ben Okopnik * Editor-in-Chief, Linux Gazette * http://LinuxGazette.NET *
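Since "hostwatch" depends on fping, one possible variation is to fall back to plain ping when fping is not installed. The sketch below is an illustration under stated assumptions, not part of the original script. The names probe_host and report_change are invented, and the "-W 1" timeout flag is assumed to behave as it does in the Linux iputils ping (other ping implementations interpret it differently).

```shell
#!/bin/bash
# Hypothetical helper: probe a host once, preferring fping if present.
# Assumes iputils-style "ping -c COUNT -W TIMEOUT_SECONDS" as a fallback.
probe_host() {
    local host=$1
    if command -v fping >/dev/null 2>&1; then
        fping -c 1 "$host" >/dev/null 2>&1
    else
        ping -c 1 -W 1 "$host" >/dev/null 2>&1
    fi
}

# Hypothetical helper: print a message only when the state changes.
# Arguments: previous state, current state ("up" or "down"), host name.
report_change() {
    local prev=$1 cur=$2 host=$3
    case "$prev:$cur" in
        up:down) echo "Connection to '$host' is DOWN." ;;
        down:up) echo "Connection to '$host' is UP again." ;;
    esac
}
```

A watch loop built on these would call probe_host about once a second and pass the old and new states to report_change. Unlike the original script, this variant reports each transition once instead of beeping repeatedly while the host is down.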

News Bytes

By Deividson Luiz Okopnik and Howard Dyckoff

Selected and Edited by Deividson Okopnik

Please submit your News Bytes items in

plain text; other formats may be rejected without reading.

[You have been warned!] A one- or two-paragraph summary plus a URL has a

much higher chance of being published than an entire press release. Submit

items to bytes@linuxgazette.net.

News in General

LPI to launch new LPIC-1 and LPIC-2 certification programs

The Linux Professional Institute (LPI) has completed an update of the

exam objectives for its popular LPIC-1 and LPIC-2 certification

programs. New versions of these exams will be published and available

on April 1, 2009. Exams taken before that date will continue to be

based on the older, current objectives and materials.

"This update of our LPIC-1 and LPIC-2 certification program is a

significant milestone in LPI's history and exemplifies our extensive,

ongoing and transparent communications with industry leaders and the

Open Source community on what are the necessary skill sets for a Linux

professional," said Jim Lacey, President and CEO of the Linux

Professional Institute. Mr. Lacey noted many of the changes to the

exams are the result of extended consultations with partner

organizations in the field and others that support LPI's certification

program: "As an example, we have broadened the scope of the LPIC-1

exams to appeal to a wider range of Linux professionals while focusing

on in-depth system administration and networking skills in the LPIC-2

certification."

Mr. Lacey also indicated that the increasing global relevance of the

LPIC program has made it necessary that these new exams are much more

sensitive to non-English exam candidates and include a greater number

of questions around localization, internationalization, and

accessibility issues.

Revised exam objectives for LPIC-1 and LPIC-2 are available on LPI's

public wiki:

https://group.lpi.org/publicwiki/bin/view/Examdev/WebHome.

The Linux Professional Institute also announced in December

improvements to its growing training partner program. LPI has more

than 220 approved training partners in 35 countries around the globe.

In the last six months alone, LPI has added training partners in

Belgium, Canada, Hong Kong, Italy, Korea, the Netherlands, the Philippines,

Slovakia, Spain, Switzerland, Tunisia, and the United Kingdom.

New Fedora 10 Adds Security and Virtualization

The Fedora Project, a community-supported open source collaboration

project, announced the availability of Fedora 10 in late November.

Fedora 10 is the tenth community release in five years. It

features substantial virtualization improvements for remote

installation and management of storage provisioning. These features

will make system administration of virtual machine hosts and guests

easier and more capable of automation, especially as they are

integrated into Red Hat Enterprise Linux. Additionally, Fedora 10

includes NetworkManager connection sharing, allowing laptop users with

an Ethernet or mobile broadband connection to provide Internet routing

to others through the laptop wireless interface, for instant

collaboration anywhere, anytime.

This release includes the premiere of a new graphical boot system

called Plymouth, designed to speed up the boot process by taking

advantage of a new kernel mode setting feature.

Fedora 10 also features a new security auditing and detection toolkit

called SecTool, and a brand-new set of Python bindings that allow

programmers to use the FIPS-140 validated NSS library for rapid

development of secure applications. The distribution also extends the

Live USB persistence feature that was pioneered in Fedora 9, adding

support for a separate user data area with optional encryption for the

ultimate experience in secure, portable computing.

Among its new features, Fedora 10 includes the new OpenOffice.org 3.0

and GNOME 2.24.1; a rewritten audio system for faster response and

lower power usage; a full set of appliance building tools; and support

for more PC hardware.

For more information on Fedora 10, to download the distribution or to

join this community effort, please visit http://fedoraproject.org/.

SUSE Linux Real Time Sets Performance Record

In independent tests performed by the Securities Technology Analysis

Center (STAC), SUSE Linux Enterprise Real Time delivered the lowest

mean and maximum latencies ever recorded with the Reuters Market Data

System (RMDS), as well as the highest RMDS throughput for a two-socket

server. These impressive results for Novell were significantly better

than similar benchmark tests performed by STAC on other Linux and UNIX

operating systems.

In its newly-released report, STAC measured the performance of a

market data stack based on the Reuters Market Data System when running

on SUSE Linux Enterprise Real Time and compared it to the same stack

using a general purpose operating system and gigabit Ethernet

technologies.

According to the independent tests performed by STAC, SUSE Linux

Enterprise Real Time delivered 10.1 million updates per second, the

highest throughput ever recorded on their standard benchmark test for

a two-socket Linux or UNIX system configuration - more than 670

percent higher than an alternative solution using a general purpose

operating system and gigabit Ethernet technologies. In the same tests,

SUSE Linux Enterprise Real Time achieved the lowest mean RMDS

latencies recorded to date by STAC on Linux or UNIX systems at nearly

all throughput rates. SUSE Linux Enterprise Real Time was able to

maintain under 0.67 milliseconds of mean latency at rates of up to

750,000 updates per second and maximum latencies never exceeded 3.01

milliseconds.

The tests were conducted using the latest HP blade servers and Intel

Quad-Core Xeon processors, along with Voltaire InfiniBand hardware and

Voltaire Messaging Accelerator (VMA) software. The specific solution

independently tested by STAC is available for customers to purchase

and implement today and is fully supported by Novell and its partners.

Flash Security Patch

Adobe has issued critical security patches for the Linux

version of its Flash Player. Older versions have a vulnerability that can

be exploited through a specially crafted SWF file. The vulnerability

affects Adobe Flash Player versions 10.0.12.36 and earlier and

9.0.151.0 and earlier.

Linux users are urged to update the Flash Player on their systems to

version 10.0.15.3.

More information about this update can be found at http://www.adobe.com/support/security/bulletins/apsb08-24.html

Oracle Contributes Data Integrity Code to Linux Community

To advance Linux use in the enterprise, Oracle contributed block I/O

data integrity infrastructure code to the 2.6.27 Linux kernel in

December. This open source code, in part developed with Emulex,

exposes key data protection information to the Linux kernel. This can

enable subsystems for the first time to utilize crucial data integrity

features - and now extend protection from applications to storage to

the Linux operating system - for comprehensive data integrity

capabilities across the entire software stack. This can reduce system

downtime and provide cost savings to end users while meeting more

stringent government regulations.

Oracle and Emulex are also working together to conduct an early

adopter program where a select group of customers can test the

end-to-end data integrity features in real world environments.

Additional Details:

* This code contribution includes generic support for data

integrity at the block and file system layers, as well as support

for the T10 Protection Information Model (formerly known as DIF)

and the Data Integrity Extensions.

* The Data Integrity Extensions, developed in collaboration with

Emulex, augment the T10 standard by allowing protection

information to be transferred to and from host memory, enabling

end-to-end data integrity protection.

* The Oracle-developed code is the first implementation of the T10

Protection Information Model standard for an operating system.

The INCITS/T10 "Protection Information Model" will help provide

end-to-end data protection for block devices. Read more at Oracle's page:

http://www.oracle.com/us/corporate/press/017754_EN

MySQL 5.1 GA Boosts Large-Scale Database Applications

In December, Sun Microsystems announced general availability of MySQL

5.1, a major new release of the popular open source database. Designed

to improve performance and simplify management of large-scale database

applications, the production-ready MySQL 5.1 software is available for

download now at http://dev.mysql.com/downloads.

MySQL 5.1 was downloaded more than 250,000 times in the first ten days

it was available.

The MySQL 5.1 release is available via three models to serve different

users:

- MySQL Community Server - An open source version of Sun's MySQL

database. Licensed under the GPL, this full-function software is

targeted to a technical user who does not require commercial

support.

- MySQL Enterprise Server - Available as part of a MySQL

Enterprise subscription, this is the secure and up-to-date version

of the MySQL database, targeted to corporate IT users. Subscribers

receive monthly rapid software updates and quarterly service packs

along with access to proactive monitoring tools and 24x7

production technical support.

- MySQL Embedded Server - This commercially-licensed version of

the MySQL software allows ISVs and OEMs to embed or bundle a

high-speed, small-footprint database with their own products,

without the free-distribution requirements of the GPL license.

For downloads and more information on MySQL 5.1, go to

http://www.mysql.com/51.

Toshiba, IBM, and AMD Develop World's Smallest SRAM Cell

Toshiba Corporation, IBM, and AMD have together developed a Static

Random Access Memory (SRAM) cell that has an area of only 0.128 square

micrometers (µm²), the world's smallest functional SRAM cell that

makes use of fin-shaped Field Effect Transistors (FinFETs). This cuts

the size of SRAM cells by more than 50% while improving memory

stability. The technology positions FinFETs as an attractive

structure for SRAMs in the 22nm node and beyond.

SRAM cells are circuit components in most systems-level, large-scale

integrated circuits such as microprocessors, and smaller SRAM cells

can support smaller, faster processors that consume less power. The

technology was announced on December 16 in a technical paper presented

at the 2008 International Electron Devices Meeting

(http://www.his.com/~iedm/general/) in San Francisco, California.

Researchers from the three companies fabricated a highly scaled FinFET

SRAM cell using HKMG (the high-k dielectric and metal gate process).

It is the smallest nonplanar-FET SRAM cell yet

achieved: at 0.128 µm², the integrated cell is more than 50 percent

smaller than the 0.274 µm² nonplanar-FET cell previously reported. To

achieve this goal, the team optimized the processes, especially for

depositing and removing materials, including HKMG, from vertical

surfaces of the non-planar FinFET structure.

The researchers also investigated the stochastic variation of FinFET

properties within the highly scaled SRAM cells and simulated SRAM cell

variations at an even smaller cell size. They verified that FinFETs

without channel doping improved transistor characteristic variability

by more than 28 percent. In simulations of SRAM cells of 0.063 µm²

area, equivalent to or beyond the cell scaling for the 22nm node, the

results confirmed that the FinFET SRAM cell is expected to offer a

significant advantage in stable operation compared to a planar-FET

SRAM cell at this generation.

Conferences and Events

- FUDCon Boston 2009
  January 9 - 11, MIT, Boston, MA
  http://fedoraproject.org/wiki/FUDCon/FUDConF11

- SharePoint Technology Conference
  January 27 - 29, Airport Hyatt, Burlingame, CA
  http://sptechcon.com/register.html

- Sun xVM Virtualization Roadshow
  January 27 - February 25, various US cities
  http://www.xvmgetmoving.com/register.php

- 2009 SCADA and Process Control Summit
  February 2 - 3, Disney Dolphin, Lake Buena Vista, FL
  http://www.sans.org/scada09_summit

- Black Hat DC 2009
  February 16 - 19, Hyatt, Arlington, VA
  http://blackhat.com/html/bh-dc-09/bh-dc-09-main.html

- Gartner Mobile & Wireless Summit 2009
  February 23 - 25, Chicago, IL
  http://gartner.com/us/wireless

- Next-Gen Broadband Strategies
  February 24, Cable Center, Denver, CO
  http://www.lightreading.com/live/event_information.asp?event_id=29032

- 7th USENIX Conference on File and Storage Technologies (FAST '09)
  February 24-27, San Francisco, CA

  Learn from Leaders in the Storage Industry at the 7th USENIX Conference on
  File and Storage Technologies (FAST '09)

  Join us in San Francisco, CA, February 24-27, 2009, for the 7th USENIX
  Conference on File and Storage Technologies. On Tuesday, February 24, FAST
  '09 offers ground-breaking file and storage tutorials by industry leaders
  such as Brent Welch, Marc Unangst, Simson Garfinkel, and more. This year's
  innovative 3-day technical program includes 23 technical papers, as well as
  a Keynote Address, Work-in-Progress Reports (WiPs), and a Poster Session.
  Don't miss out on opportunities for peer interaction on the topics that
  mean the most to you.

  Register by February 9 and save up to $200!
  http://www.usenix.org/fast09/lg

- DrupalCon 2009
  March 4 - 7, Washington, DC
  http://dc2009.drupalcon.org/

- Unconference for technical product managers
  March 14, Yahoo!, Sunnyvale, CA
  Register here

- Orlando Scrum Gathering 2009
  March 16 - 18, Gaylord Palms, Orlando, FL
  http://www.scrumgathering.org

- Forrester's IT Infrastructure & Operations Forum
  March 18 - 19, San Diego, CA
  http://www.forrester.com/events/eventdetail?eventID=2372

- EclipseCon 2009
  March 23 - 26, Santa Clara, CA
  http://www.eclipsecon.org/2009/home

- ApacheCon Europe 2009
  March 23 - 27, Amsterdam, Netherlands
  http://eu.apachecon.com/c/aceu2009

- Web 2.0 Expo San Francisco
  March 31 - April 3, San Francisco, CA
  http://www.web2expo.com/

- SCALE Returns to LAX, Expands Tracks

  SCALE 7x, the premier Open Source Community conference in the
  southwestern United States, will be held February 20th - 22nd, 2009. It
  will also return to the Westin LAX Hotel, site of last year's 6th
  Expo.

  For 2009, the main weekend conference has been expanded: in addition
  to the three main tracks, a Beginner's track and a Developer's track
  have been added. SCALE will also reprise the Women In Open Source
  ("WIOS") and Open Source Software In Education ("OSSIE") specialty
  tracks on the Friday prior to SCALE.

  DOHCS, the Demonstrating Open Source Health Care Solutions conference,
  has spun off on its own but will be co-located with SCALE on the
  Friday prior to SCALE. See their Web site at http://www.dohcs.org for
  info and to register.

  A Full Access conference pass (Friday - Sunday) costs only $60.
  Register for SCALE 7x at http://www.socallinuxexpo.org.

Distro News

Jaunty Jackalope Alpha 2 released

Jaunty Jackalope Alpha 2 is ready for testing; this release will in

time become the Ubuntu 9.04 release.

Alpha 2 is the second in a series of milestone CD images that will be

released throughout the Jaunty development cycle. The Alpha images are

thought to be free of show-stopping build or install bugs. The current

snapshots can be downloaded here:

http://cdimage.ubuntu.com/releases/jaunty/alpha-2/ (Ubuntu)

http://cdimage.ubuntu.com/kubuntu/releases/jaunty/alpha-2/ (Kubuntu)

http://cdimage.ubuntu.com/xubuntu/releases/jaunty/alpha-2/ (Xubuntu)

Please refer to http://www.ubuntu.com/testing/jaunty/alpha2 for information on changes in Ubuntu.

Ubuntu 9.04 Alpha 3 is planned for mid-January 2009.

Good OS Announces gOS Cloud Operating System

In November, Good OS, best known for its gOS Linux that debuted in

Wal-Mart computers, announced "Cloud," a new operating system that

boots into a browser with Google and Yahoo! in seconds and optionally

boots into Windows.

Good OS previewed Cloud on a GIGABYTE Touch-Screen Netbook at the

Netbook World Summit in Paris and online at http://www.thinkgos.com.

GIGABYTE Touch-Screen Netbooks will be preloaded with Cloud and

Windows XP together early in 2009.

Cloud integrates a Web browser with a compressed Linux operating

system kernel for immediate access to the Internet, integration of browser

and rich client applications, and full control of the computer from

inside the browser, and features an attractive icon dock for shortcuts

to favorite apps and tabs for multi-tasking between Web and rich client

apps.

"Cloud is my favorite gOS product yet," said David Liu, Founder and

CEO of Good OS. "Just seconds after I power on, I'm Googling,

Yahooing, and truly Living. I am very excited about the upcoming

GIGABYTE Touch-Screen Netbooks with Cloud and Windows. I think the

GIGABYTE Touch-Screen Netbook's forward thinking hardware and software

will make it the best Netbook to hit the market!"

More details about Cloud and GIGABYTE Touch-Screen Netbooks will be

released in January at the Consumer Electronics Show in Las Vegas.

openSUSE 11.1 Improves User Experience

The openSUSE Project, a Novell-sponsored and community-supported open

source project, announced the release of openSUSE 11.1 in

mid-December. The new release delivers a richer user experience with

enhancements to desktop productivity, entertainment applications, and

software and system management.

openSUSE 11.1 was entirely developed using the recently released

openSUSE Build Service 1.0, a new collaboration system that enables

contributors to work closely together on Linux packages or solution

stacks. This allows quicker developer contributions to the openSUSE

project.

Updates to openSUSE 11.1 include:

- The new 2.6.27.7 Linux kernel, which adds support for a

number of new devices and improved video camera support;

- An improved remote desktop experience with Nomad;

- A simpler license that eliminates the EULA and removes software

which previously made it difficult to redistribute openSUSE;

- Improvements to YaST, openSUSE's system administration and

installation suite, including an improved partitioner, new printer

module, and a new module to check system security;

- Latest versions of major applications including Firefox 3.0.4,

OpenOffice.org 3.0, GNOME 2.24.1, KDE 4.1.3 + KDE 3.5.10 and Mono

2.0.1;

- Most recent versions of GNOME and KDE.

openSUSE 11.1 can be freely downloaded now at http://www.opensuse.org. A

retail box version is also available for $60 at http://shop.novell.com

and includes 90 days of installation support, commercial applications

not on the main DVD, and the popular "Getting Started Guide," a

printed manual for new Linux users.

Mandriva presents new Mandriva Flash 2009

Mandriva announced Mandriva Flash 2009, the new product in the popular

Mandriva Flash family. It enables mobility of an entire desktop and can be

run from any PC in just a few clicks. It is a fully operational system

requiring no installation and which fits into any pocket.

Mandriva Flash is a complete work station with the following software

for Linux:

- Mozilla Firefox and Thunderbird;

- Ezboot, to boot into Mandriva Flash from Windows;

- KDE 4, the advanced, attractive and efficient Linux desktop;

- Java and Flash support for Web content;

- Skype for phone calls over the Internet;

- Google Toolbar to make searching easier;

- OpenOffice.org - compatible with most widely used office suites.

This new version comes with a migration tool

enabling you to import Windows settings and documents.

Mandriva Flash 2009 is available in several languages. The new 8 GB

model is available now for $55 US and can be found on

http://store.mandriva.com/.

Slackware 12.2 now out

Slackware 12.2 was released in December with many improvements over

Slackware 12.1. Among the many program updates and distribution

enhancements are two desktop environments: Xfce 4.4.3 and KDE 3.5.10.

Slackware uses the 2.6.27.7 kernel with support for X DRI (the Direct

Rendering Interface) which brings high-speed hardware accelerated 3D

graphics to Linux.

Here are some of the advanced features of Slackware 12.2:

- The 2.6.27.7 version of the Linux kernel;

- System binaries are linked with the GNU C Library, version 2.7;

- Installs gcc-4.2.4 as the default C, C++, Objective-C, Fortran-77/95, and Ada 95 compiler;

- Apache (httpd) 2.2.10 Web server and PHP 5.2.8;

- PCMCIA, CardBus, USB, IEEE 1394 (FireWire) and ACPI support;

- New development tools, including Perl 5.10.0, Python 2.5.2, and much more;

- Package tracking to help upgrade from Slackware 12.1 to Slackware

12.2. The slackpkg tool can also help update from an older version of

Slackware to a newer one;

- Includes KDE's Konqueror 3.5.10, SeaMonkey 1.1.13 (this is the

replacement for the Mozilla Suite), and Firefox 3.0.4, as well as the

Thunderbird 2.0.0.18 email and news client with advanced junk mail

filtering.

Get Slackware 12.2 here: ftp://ftp.slackware.com/pub/slackware/slackware-12.2/

Software and Product News

Adobe AIR 1.5 Now Available for Linux

In December, Adobe Systems announced availability of Adobe AIR 1.5

software for Linux operating systems. Adobe AIR 1.5, a key component

of the Adobe Flash Platform, enables Web developers to use HTML,

JavaScript, ActionScript and the free, open source Flex framework to

deliver Web applications outside the browser.

"The release of Adobe AIR 1.5 for Linux builds on our ongoing

commitment to the Linux community and our legacy of creating

technologies that work consistently across operating systems," said

David Wadhwani, general manager and vice president of the Platform

Business Unit at Adobe. "Using Adobe AIR, millions of Web developers

can now be Linux developers, and with little effort and no additional

costs, companies can now target emerging markets and a broad set of

new devices where Linux is growing in popularity." The graphical

capabilities of Adobe AIR, along with its access to operating system

functionality, give AIR applications the capacity to provide

unprecedented user experiences and brand differentiation for the

developers and companies creating them.

AIR 1.5 includes functionality introduced in Adobe Flash Player 10,

such as support for custom filters and effects, native 3D

transformation and animation, and extensible rich text layout.

Offering new features and performance improvements to create more

expressive AIR applications, Adobe AIR 1.5 incorporates the open

source WebKit HTML engine and now accelerates application performance

with "SquirrelFish," the new WebKit JavaScript interpreter. Adobe AIR

1.5 also includes an encrypted database for enterprise security

compliance requirements.

Adobe AIR 1.5 for Linux is available as a free download at

http://get.adobe.com/air/.

Ubuntu on Amazon EC2 Beta Released

The Ubuntu team is now offering a beta release of Ubuntu 8.10 Server

Edition on Amazon's EC2 cloud computing service. This is the first beta

release of an Ubuntu Server Edition image optimised for EC2's cloud

computing environment.

This service allows users to create a fully running instance of Ubuntu

Server on EC2 in just a few clicks. All the applications, such as a

Web server, E-mail server and common development frameworks, are

available. There's no charge for using Ubuntu on Amazon EC2; it is

provided complete with maintenance updates. However,

Amazon will charge EC2 users for their usage of the service.

Canonical has fully tested the images of Ubuntu Server Edition on

Amazon's EC2 service but they want wider testing from the community

and also want to learn how users interact with EC2 so they can make

improvements.

Apply for access to the EC2 beta program here:

http://www.ubuntu.com/ec2.

New VirtualBox 2.1 Improves Virtualization Performance

Sun Microsystems released a new version of Sun xVM VirtualBox, its

free and open source desktop virtualization software, in December. This

new version offers improved support for VMware's virtual machine disk

format (VMDK) and Microsoft's virtual hard disk (VHD) file format,

allowing the transfer of critical business information. To download

the freely distributed xVM VirtualBox software, visit:

http://www.sun.com/software/products/virtualbox/get.jsp.

Users of xVM VirtualBox 2.1 software will benefit from significant

improvements in graphics and network performance, easier

configuration, hardware platform support for the latest processors and

additional interoperability. The update allows users to run a 64-bit

guest OS on 32-bit host platforms without the need to upgrade the host

OS. VirtualBox now offers improved performance on the new Intel Core

microarchitecture in the Core i7 processor family (codenamed Nehalem).

VirtualBox 2.1 features a number of new enhancements, including:

- New Accelerated 3D Graphics: Uses the industry standard

application programming interface (API) for high-performance

graphics, Open Graphics Library (OpenGL), allowing users to run

applications like Google Earth and CAM-based software used by

industrial designers, architects, etc.

- Improved Network Performance: Makes network intensive

applications like video faster. Bridged networking configurations

on Windows and Linux platforms make it easier to deploy server

applications in virtual machines using Web stacks like LAMP or

SAMP.

- Better Storage Support: Comes with built-in iSCSI support to

connect to storage systems and enables easier management and

sharing of virtual disk images.

xVM VirtualBox software has been growing in popularity, surpassing 8

million downloads worldwide since October 2007.

JBoss Application Server gets Java EE 5 Compatibility

After launching its JBoss Application Server 5.0 release from the

JBoss.org Community in November, the JBoss team has officially

confirmed that the JBoss Application Server is Java EE 5 Certified.

Read about the Java EE certification here:

http://java.sun.com/javaee/overview/compatibility.jsp

Enterprise support for JBoss Application Server 5 will be included in

JBoss Enterprise Application Platform v5, the enterprise release

expected early in 2009.

HP expands Linux offerings for business and education

To provide customers with more cost-effective and secure computing

options, HP has introduced a new desktop offering with SUSE Linux

Enterprise Desktop from Novell on the HP Compaq dc5850. This joint

solution delivers a tightly integrated suite of essential

applications, including OpenOffice.org, Web browser, multimedia tools,

and email with collaboration and instant messaging software to drive

productivity for business customers.

For education customers, HP is working with Novell to develop and

maintain a repository of more than 40 applications, including math,

art and word games, to improve student learning. In addition,

applications for school administration and instruction will be

available for teachers and administrators.

The HP Compaq dc5850 with SUSE Linux Enterprise Desktop became available Dec.

15 in North America at a U.S. street price of about $500.

Zmanda Launches Advanced Backup Based on OpenSolaris ZFS

Zmanda released both Amanda Enterprise and Zmanda Recovery Manager

(ZRM) for MySQL for the OpenSolaris 2008.11 platform, which was

announced by Sun Microsystems in December, and will be available in

OpenSolaris repositories.

The combination of Zmanda's backup solutions with Sun's ZFS file

system creates an advanced backup-to-disk offering. Specifically, the

snapshot capability of ZFS enables fast and scalable backups of

demanding workloads.

ZRM for MySQL is a mission-critical backup and recovery solution

designed specifically for MySQL databases. ZRM includes unique,

enterprise-grade features such as MySQL storage engine and snapshot

intelligence, replication support, and integration of backup

operations with MySQL binary logs. ZRM comes with the Zmanda

Management Console - a powerful Web-based graphical user interface

that lets DBAs control backup and recovery across all MySQL servers

from any browser-enabled device.

For more information about Zmanda, visit http://www.zmanda.com.

![[BIO]](../gx/authors/dokopnik.jpg)

Deividson was born in União da Vitória, PR, Brazil, on

14/04/1984. He became interested in computing when he was still a kid,

and started to code when he was 12 years old. He is a graduate in

Information Systems and is finishing his specialization in Networks and

Web Development. He codes in several languages, including C/C++/C#, PHP,

Visual Basic, Object Pascal and others.

Deividson works in Porto União's Town Hall as a Computer

Technician, and specializes in Web and Desktop system development, and

Database/Network Maintenance.

Howard Dyckoff is a long-term IT professional with primary experience at

Fortune 100 and 200 firms. Before his IT career, he worked for Aviation

Week and Space Technology magazine and before that used to edit SkyCom, a

newsletter for astronomers and rocketeers. He hails from the Republic of

Brooklyn [and Polytechnic Institute] and now, after several trips to

Himalayan mountain tops, resides in the SF Bay Area with a large book

collection and several pet rocks.

Howard maintains the Technology-Events blog at

blogspot.com from which he contributes the Events listing for Linux

Gazette. Visit the blog to preview some of the next month's NewsBytes

Events.

Gnuplot in Action

By Philipp K. Janert

This article is based on Gnuplot in Action, to be published January 2009.

It is being reproduced here by permission from Manning Publications.

Manning early access books and ebooks are sold exclusively through Manning.

Visit the book's page for more information.

The purpose of computing is insight, not numbers.

-- R.W. Hamming

The purpose of computing is insight, not pictures.

-- L.N. Trefethen

Preface

I have been using gnuplot for 15 years, and it is an indispensable part of

my toolset: one of the handful of programs I cannot do without.

Initially, I used gnuplot as part of my academic research work as a

theoretical condensed matter physicist. But much later, when I joined

Amazon.com, I found myself using gnuplot again, this time to analyze the

movement of workers in Amazon's gargantuan warehouses and the distribution

of packages to customers. Later yet, I found gnuplot very helpful when

analyzing Web-traffic patterns for the Walt Disney Company.

I find gnuplot indispensable because it lets me see data, and do so in

an easy, uncomplicated manner. Using gnuplot, I can draw and redraw graphs

and look at data in different ways. I can generate images of data sets

containing millions of points, and I can script gnuplot to create graphs

for me automatically.

These things matter. In one of my assignments, I was able to discover very

relevant information because I was able to generate literally

hundreds of graphs. Putting all of them on a Web page next to each

other revealed blatant similarities (and differences) between different

data sets - a fact that had never been noticed before, not least because

everybody else was using tools (mostly Excel) that would only allow graphs

to be created one at a time.

Gnuplot has always been popular with scientists all over - I hope to

convince you that it can be useful to a much larger audience. Business

analysts, operations managers, database and data warehouse administrators,

programmers: really anybody who wants to understand data with graphs.

I'd like to show you how to do it.

Prelude: Understanding Data with Graphs

Gnuplot is probably the most widely used open-source program to plot and

visualize data. In this book, I want to show you how to use gnuplot to make

plots and graphs of your data: both quick and easy graphs for your own use,

and highly polished graphs for presentations and publications.

But I also want to show you something else: how to solve data analysis

problems using graphical methods. The art of discovering relationships in

data and extracting information from it by visual means is called

"graphical analysis". I believe gnuplot to be an excellent tool with which to

do it.

As a teaser, let's take a look at some problems and how we might be able to

approach them using graphical methods. The graphs here and in the rest of

the book (with very few exceptions) have been, of course, generated with

gnuplot.

A Busy Weekend

To get a feeling for the kinds of problems that we may be dealing with, and

for the kinds of solutions that gnuplot can help us find, let's look at two

examples. Both take place during a long and busy weekend.

Planning a Marathon

Imagine you are in charge of organizing the local city marathon. There will

be over 2000 starters, traffic closed around the city, plenty of spectators

- and a major Finish Line Festival to celebrate the victors and help the

wounded. The big question is: When should the Finish Line crew be ready to

deal with the majority of runners? At what point do we expect the big

influx of the masses?

You have available to you the results from last year's event. Assuming that

the starters have not improved dramatically over the last year (probably a

safe assumption!), you do a quick average on the completion times and find

that last year's average was 172 minutes. To be on the safe side, you

calculate the standard deviation as well, which comes out to about 15

minutes. So you tell your crew to be ready for the big rush starting two

and a half hours (150 minutes) after the start, and feel reasonably well

prepared for the event.

So it comes as a bit of a surprise when on the big day plenty of runners

start showing up at the finish line after only 130 minutes - a good twenty

minutes earlier than the expected onset of the rush. In terms of event

management, 20 or 30 minutes are not catastrophic, yet it is a bit strange.

The next day you wonder: What went wrong?

Let's take a look at the data to see what we can learn about it. So far,

all we know of it is the mean and the standard deviation.

The mean is convenient: It is easy to calculate and it summarizes the

entire data set in a single number. But in forming the mean, we lost a lot

of information. To understand the whole data set, we will have to

look at it. And since we can't understand data by looking at more

than 2000 individual finish times, this means we will have to plot

it.

It will be convenient to group the runners by completion time and to count

the number of participants that completed during each full minute. The

resulting file might start like this:

# Minutes Runners

133 1

134 7

135 1

136 4

137 3

138 3

141 7

142 24

...
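The grouping step can be sketched in a few lines of Python (not gnuplot). This is only an illustration under stated assumptions: the finish times are made up, the function name runners_per_minute is hypothetical, and each time is assumed to be given in minutes and is truncated to the full minute in which the runner finished.

```python
from collections import Counter

def runners_per_minute(finish_times):
    """Count how many runners finished during each full minute."""
    # int() truncates a time such as 134.9 to the full minute 134.
    counts = Counter(int(t) for t in finish_times)
    return sorted(counts.items())

# Hypothetical finish times in minutes (not the article's data).
finish_times = [133.4, 134.1, 134.9, 135.5, 134.2, 136.0]
rows = runners_per_minute(finish_times)

# Produce the two-column layout shown above: minutes, then runners.
lines = ["# Minutes Runners"] + [f"{minute} {count}" for minute, count in rows]
print("\n".join(lines))
```

Writing these lines to a text file gives gnuplot the column layout it expects.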

Now we plot the number of runners against the completion time.

Figure 1.1. Number of finishers vs. time to complete (in minutes).

It is immediately obvious where we went wrong: the data is bi-modal,

meaning it has two peaks. There is an early peak at around 150 minutes, and

a later main peak at 180 minutes.

Actually, this makes good sense: A major sporting event such as a city

marathon attracts two very different groups of people: "athletes", who

train and compete throughout the year and are in it to win, and a much

larger group of "amateurs", who come out once a year for a big event and

are mostly there to participate.

The problem is that for such data the mean and standard deviation are

obviously bad representations - so much so that at the time when we

expected the big rush (170 minutes), there is actually a bit of a lull at

the finish line!
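To see how this can happen, here is a small Python sketch with purely synthetic counts: two triangular "bumps" of runners, a smaller one around 150 minutes and a larger one around 180 minutes. The bump shapes and sizes are assumptions chosen only to mimic the two peaks; they are not the marathon data.

```python
import statistics

def bump(center, height, half_width):
    """Synthetic triangular bump of runner counts around `center`."""
    return {m: height * (half_width - abs(m - center)) // half_width
            for m in range(center - half_width + 1, center + half_width)}

# Smaller early group ("athletes") and larger late group ("amateurs").
counts = bump(150, 30, 10)
for minute, n in bump(180, 60, 12).items():
    counts[minute] = counts.get(minute, 0) + n

# Expand the per-minute counts into one finish time per runner.
times = [minute for minute, n in counts.items() for _ in range(n)]
mean = statistics.mean(times)
std = statistics.pstdev(times)

print(f"mean = {mean:.1f} min, standard deviation = {std:.1f} min")
print("runners finishing at the mean minute:", counts.get(round(mean), 0))
print("runners at the two peaks:", counts[150], counts[180])
```

With these made-up numbers the mean falls between the peaks, at a minute when fewer runners finish than at either peak, and neither summary number gives any hint that there are two groups.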

The take-home message here is that it is usually not a good idea to rely on

summary statistics (such as the mean) for unknown data sets. We

should always investigate what the data looks like. Once we have

confirmed the basic shape, we can choose how to summarize our findings

best.

And of course, there is always more to learn. In this example, for

instance, we see that after about 200 minutes almost everybody has made it,

and we can start winding down the operation. The actual "tail" of the

distribution is quite small - actually a bit surprisingly so (I would have

expected to see a greater number of real "stragglers", but possibly many

runners that are really slow drop out of the race when they

realize they will place badly).

Using Gnuplot

Let's take a look at the gnuplot command that was used to generate figure

1.1. Gnuplot is command-line oriented: after you start gnuplot, it drops

you into an interactive command session, and all commands are typed at the

interactive gnuplot prompt.

Gnuplot reads data from simple text files, with the data arranged in

columns as shown above. To plot a data file takes only a single command:

plot, like this:

plot "marathon" using 1:2 with boxes

The plot command requires the name of the data file as

argument in quotes. The rest of the command line specifies which columns

to use for the plot, and in which way to represent the data. The

using 1:2 declaration tells gnuplot to use the first and

second column in the file called marathon. The final part of

the command: with boxes, selects a box style, which is often

suitable to display counts of events, as in this case.

Gnuplot handles most everything else by itself: it sizes the graph and

selects the most interesting plot range, it draws the border, and it draws

the tic marks and their labels. All these details can be customized, but

gnuplot typically does a good job at anticipating what the user wants.

1.1.2. Determining the Future

The same weekend that 2000 runners are running through the city, a diligent

graduate student is working on his research topic. He studies Diffusion

Limited Aggregation (DLA), a process wherein a particle performs a random

walk, until it comes in contact with a growing "cluster" of particles. At

the moment of contact, the particle sticks to the cluster at the location

where the contact occurred and becomes part of the cluster. Now, a new

random walker is released to perform a random walk, until it sticks to the

cluster. And so on.
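A naive version of this process might look like the following Python sketch. It is not the graduate student's program: the square lattice, the launch circle just outside the cluster, and the rule that discards walkers which stray too far are simplifying assumptions, and the name grow_dla is hypothetical.

```python
import math
import random

def grow_dla(n_particles, seed=0):
    """Grow a small on-lattice DLA cluster; return the set of occupied sites."""
    rng = random.Random(seed)
    cluster = {(0, 0)}            # seed particle at the origin
    max_r = 0.0                   # distance of the farthest particle
    steps = ((1, 0), (-1, 0), (0, 1), (0, -1))
    while len(cluster) < n_particles:
        # Release a new walker on a circle just outside the cluster.
        launch_r = max_r + 5.0
        angle = rng.uniform(0.0, 2.0 * math.pi)
        x = int(round(launch_r * math.cos(angle)))
        y = int(round(launch_r * math.sin(angle)))
        while True:
            dx, dy = rng.choice(steps)
            x, y = x + dx, y + dy
            if math.hypot(x, y) > 3.0 * launch_r:
                break             # strayed too far: discard this walker
            if any((x + sx, y + sy) in cluster for sx, sy in steps):
                cluster.add((x, y))   # contact: stick where it touched
                max_r = max(max_r, math.hypot(x, y))
                break
    return cluster

cluster = grow_dla(50)
print(len(cluster), "particles in the cluster")
```

Every walker takes many steps before it sticks, and the region it has to wander through grows with the cluster, which gives a feeling for why such a simple program slows down as the cluster gets larger.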

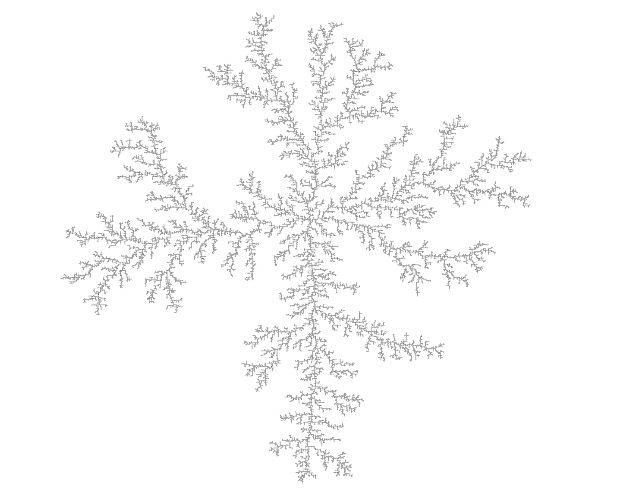

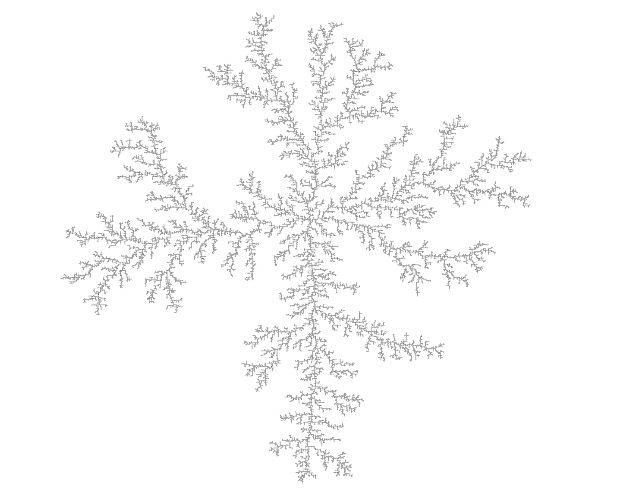

Clusters grown through this process have a remarkably open, tenuous

structure (cf. figure 1.2). DLA clusters are fractals, but rather little is

known about them with certainty.[1]

Figure 1.2. A DLA cluster of N=30,000 particles, drawn with gnuplot.

The DLA process is very simple, so it seems straightforward to write a

computer program to grow such clusters in a computer, and this is what our

busy graduate student has done. Initially, all seems well, but as the

simulation progresses, the cluster seems to grow more and more slowly.

Excruciatingly slowly, in fact. The goal was to grow a DLA cluster of

N=100,000 particles - will the program ever finish?

Luckily, the simulation program periodically writes information about its

progress to a log file: for each new particle added to the cluster, the

time (in seconds) since the start of the simulation is recorded. We should

be able to predict the completion time from this data, but an initial plot

(figure 1.3) is just not very helpful: there are just too many ways that

this curve can be extrapolated to larger cluster sizes.

Figure 1.3. Time required to grow a DLA cluster.

However, the time consumed by many computer algorithms grows as a simple

power of the size of the problem. In our case, this would be the number N

of particles in the cluster: T ~ N^k, for some value of k.

Our research student therefore plots the running time of his simulation

program on a double logarithmic plot versus the cluster size - and, lo, the

data falls on a straight line, indicating power law behavior. (I will

explain later how and why this works.) Through a little trial and error, he

also finds an equation that approximates the data quite well. The equation

can be extended to any cluster size desired and will give the time

required. For N=100,000 (which was the original goal), we can read off

T=300,000 seconds (or more), corresponding to 83 hours or four days, so we

can tell our friend that there is no point in spending the weekend in the

lab - he should go out (maybe run a Marathon), and come back on Monday. Or

perhaps work on a better algorithm. (For simulations of DLA cluster growth,

dramatic speedups over the naive implementation are possible. Try it if you

like.)

Figure 1.4. Time required to grow a DLA cluster in a double-logarithmic plot, together with an approximate mathematical model.
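The arithmetic behind this estimate can be checked with a short Python sketch. It uses the model quoted in the plot command for figure 1.4, 1.2*(x/1000)**2.7; since the log file itself is not available here, the "measurements" are simply points generated from that model, which is an assumption made for illustration. On a double logarithmic plot a power law T ~ N^k becomes a straight line with slope k, so a least-squares line through (log N, log T) recovers the exponent.

```python
import math

def model_time(n):
    """Run time in seconds predicted by the model 1.2*(x/1000)**2.7."""
    return 1.2 * (n / 1000) ** 2.7

def loglog_slope(points):
    """Least-squares slope of log(T) against log(N); equals k if T ~ N^k."""
    xs = [math.log(n) for n, _ in points]
    ys = [math.log(t) for _, t in points]
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = sum((x - mx) ** 2 for x in xs)
    return num / den

# Stand-in for the log file: points generated from the model itself.
points = [(n, model_time(n)) for n in (1000, 2000, 5000, 10000, 30000)]
k = loglog_slope(points)
t_goal = model_time(100_000)
print(f"k = {k:.2f}, T(100,000) = {t_goal:,.0f} s = {t_goal / 3600:.1f} h")
```

For N=100,000 the model gives a little over 300,000 seconds, in line with the value read off the graph.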

1.1.2.1. Using Gnuplot

Again, let's see how the graphs in this section were created. The easiest

to understand is figure 1.3. Given a file containing two columns, one

giving the cluster size and the other giving the completion time, the

command is simply:

plot "data" using 1:2 with lines

The only difference compared to figure 1.1 is the style: rather than boxes,

I use line segments to connect consecutive data points, hence: with lines.

Did you notice that figure 1.3 and figure 1.4 contain more than just data?

Both axes are now labelled! Details such as labels and other helpful

decorations often make the difference between a mediocre and a high-quality

graph, because they provide the observer with the necessary context to

fully understand the graph.

In gnuplot, all details of a graph's appearance are handled by setting the

appropriate options. To place the labels on the x- and y-axes in figure

1.3, I used:

set xlabel "Cluster Size"

set ylabel "Run Time [sec]"

Figure 1.4 is drawn using double-logarithmic axes. This is yet another

option, which is set as follows:

set logscale

Figure 1.4 shows two curves: the data together with a best "fit". Plotting

several data sets or mathematical functions together in one plot is very

simple: we just list them one after another on the command line for the

plot command:

plot "runtime" using 2:6 title "data" with lines, \
     1.2*(x/1000)**2.7 title "model"

This command introduces a further gnuplot feature: the title

directive. It takes a string as argument, which will be displayed together

with a line sample in the plot's key or legend (visible in the upper left

of figure 1.4).

Finally, we come to figure 1.2. That's a somewhat different beast. You will

notice that the border and the tic marks are missing. The aspect ratio (the

ratio of the graph's width to its height) has been constrained to 1, and a

single dot has been placed at the position of each particle in the cluster.

Here are the most important commands that I used:

unset border

unset xtics

unset ytics

set size square

plot "cluster" using 1:2 with dots

You see that gnuplot is really very simple to use. In the next section, I'd

like to talk more about using graphical methods to understand a data set,

before coming back to gnuplot and discussing why it is my favorite tool for

this kind of activity.

1.2. What is Graphical Analysis?

These two examples should have given you an idea of what Graphical Analysis is

and how it works. The basic steps are always the same:

- Plot the data.

- Inspect it, trying to find some recognizable behavior.

- Compare the actual data to data that represents the hypothesis from the

previous step (e.g. in the second example above, we plotted running time of

the simulation program together with a power-law function).

- Repeat.

We may try more sophisticated things, but this is the basic idea. If the

hypothesis in step two seems reasonably well justified, we will often try

and remove its effect, for instance by subtracting a formula from

the data, to see whether there is any recognizable pattern in the residual.

And so on.
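Subtracting a formula from the data is a one-line operation. The Python sketch below assumes the power-law model quoted for figure 1.4 as the hypothesis and uses hypothetical measurements (the model plus a small alternating offset) in place of real data; the function name residuals is likewise just for illustration.

```python
def model(n):
    # Power-law hypothesis with the constants quoted for figure 1.4.
    return 1.2 * (n / 1000) ** 2.7

def residuals(points, model):
    """Difference between each measured value and the model's prediction."""
    return [(x, y - model(x)) for x, y in points]

# Hypothetical measurements: the model plus a small alternating offset.
points = [(n, model(n) + (5.0 if i % 2 else -5.0))
          for i, n in enumerate(range(1000, 6000, 1000))]

res = residuals(points, model)
for n, r in res:
    print(n, round(r, 3))
```

Plotting the second column against the first shows whatever structure is left once the hypothesis has been removed; here that is the alternating offset that was put in by hand.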

Iteration is a crucial aspect of graphical analysis: plotting the data this

way and that way, comparing it to mathematical functions or to other data

sets, zooming in on interesting regions or zooming out to detect the

overall trend, applying logarithms or other data transformations to change

its shape, using a smoothing algorithm to tame a noisy data set... During

an intense analysis session using a new but promising data set, it is not

uncommon to produce literally dozens of graphs.

None of these graphs will be around for long. That's because they are

transient, persisting just long enough for us to form a new hypothesis,

which we'll try to justify in the next graph we may draw. This also means

that these graphs will not be "polished" in any way, since they are the

graphical equivalent to scratch paper: notes of work in progress, not

intended for anyone but oneself.

This isn't to say that polishing doesn't have its place. But it comes

later in the process: once we know what the results of our analysis are, we

need to communicate them to others. At this point, we will create

"permanent" graphs, which will be around for a long time - maybe until the

next departmental presentation, or (if the graph will be part of a

scientific publication, for instance), forever!

Such permanent graphs have different requirements: other people must be

able to understand them, possibly years later, and most likely without us

there to explain them. Therefore, graph elements like labels, captions, and

other contextual information become very important. Presentation graphs

must be able to stand by themselves. Presentation graphs also should make

their point most clearly. Now that we know what the results of our analysis

are, we should find the clearest and most easily understood way of

presenting our findings. A presentation graph should make one point and

make it well.

Finally, some would argue that a presentation graph should "look good".

Maybe. If it makes its point well, there is no reason why it shouldn't be

visually pleasing as well. But that is an afterthought. Even a presentation

graph is about the content, not the packaging!

1.2.1. Data Analysis and Visualization Concepts

Data analysis and visualization is a broad field. Besides different

graphical approaches, there are of course also other methods, which may do

entirely without visual help. I think it will help to introduce and

distinguish a number of terms and concepts for different activities in data

analysis. At times the boundaries between these different concepts may be a

little fuzzy, but I think the overall distinctions are quite clear.

- Graphical Analysis

Graphical analysis is an investigation of data using graphical

methods. The purpose is the discovery of new information about the

underlying data set. In graphical analysis, the "proper" question to ask is

often not known from the outset, but is discovered as part of the analysis

process.

- Presentation Graphics

In contrast to graphical analysis, presentation graphics is

concerned with the communication of information and results that are

already understood: the discovery has been made, now it merely needs to be

communicated clearly!

- Control Charts

I use the term "control chart" somewhat loosely for

situations where we already know the questions to ask of the data (as in

the case of presentation graphics), but where the primary audience for the

graph is not the public, but the people who created the data themselves.

Besides classical control charts (for example in quality engineering), many

plots of experimental data fall into this category, because the question is

determined at the outset and the graph is drawn to extract specific values

to answer it.

- Reality Representation

What graphical analysis, presentation graphics, and control

charts have in common is that they are "digital": some aspect of reality

has been measured and translated into numbers, and it is these numbers

which are plotted (temperature, stock price, electric field strength,

response time, ... whatever).

Reality representation by contrast tries to construct an image that is in

some form analogous to the system under consideration. A regular

topographic map is a simple form of reality representation. More complex

computer-assisted methods include three-dimensional solid body imaging,

many ray-tracing systems, most immersive "virtual reality" methods, and

many network flow or relationship connectivity visualization systems.

Data analysis using reality representation is a large, amorphous, and

highly experimental field.

- Image Analysis

Image analysis takes a two- or (rarely) three-dimensional image of the

system under investigation and tries to detect significant structure in

this image, often using color variations to indicate changes in value -

think medical imaging. Image analysis may either be highly automated (using

signal-processing methods), or be done visually. In the latter case, it

shares aspects with graphical analysis.

- Statistical Analysis

This is the "classical" definition of data analysis. Statistical analysis

typically tries to characterize a data set by calculating some mathematical

quantity (such as the mean, the median, or the standard deviation) from the

data. Statistical analysis gives a quantitative answer to a known,

well-posed question.

Statistical analysis works great if we know what questions to ask of the

data, and if we want to perform essentially similar analyses repeatedly

(for instance after varying some control parameter in a prescribed

fashion). But it is not applicable if the question to ask is not yet known,

and it can be misleading even otherwise, as our Marathon example has shown:

statistical analysis always makes some (silent) assumptions about the data,

which may not be fulfilled in practice. These challenges are well-known in

the statistical community.

- Exploratory Data Analysis

Exploratory (or Initial) Data Analysis (EDA or IDA) is a term sometimes

used in the statistical literature to describe the initial examination of

data to determine its basic characteristics. Graphs typically play a large

role. What makes it different from graphical analysis is that it is only

seen as a precursor to a "real" formal statistical analysis.
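
The caveat about silent assumptions in statistical analysis can be made concrete with a few lines of Python. The sketch below uses invented numbers (not the Marathon data) for a sample with two well-separated clusters. For such data, the mean and median land in a region where there are no observations at all, which a graph would show at a glance.

```python
import statistics

# Hypothetical sample with two clusters (values invented for illustration).
fast = [178, 180, 181, 183, 185]
slow = [268, 270, 272, 275, 277]
times = fast + slow

mean = statistics.mean(times)
median = statistics.median(times)
stdev = statistics.stdev(times)

# Collect the observations that lie within 20 units of the mean.
# If a summary statistic describes the data well, there should be data nearby.
near_mean = [t for t in times if abs(t - mean) <= 20]

print(f"mean={mean:.1f} median={median:.1f} stdev={stdev:.1f}")
print(f"observations within 20 of the mean: {len(near_mean)}")
```

The summary numbers are computed correctly, yet they describe a "typical" value that never occurs in the sample.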

1.2.2. Why Graphical Analysis?

Graphical analysis is a discovery tool: we can use it to reveal as yet

unknown information in data. In comparison to statistical methods, it helps

us to discover new and possibly quite unexpected behavior.

Moreover, it helps us to develop an intuitive understanding of the data and

the information it contains. Since it does not require particular math

skills, it is accessible to anyone with an interest and a certain amount of

intuition.

Even if rigorous model-building is our ultimate goal, graphical methods

still need to be the first step, so that we can develop a sense for the

data, its behavior and quality. Knowing this, we can then select the most

appropriate formal methods.

1.2.3. Limitations of Graphical Analysis

Of course, graphical analysis has limitations and its own share of

problems.

Graphical analysis doesn't scale. Graphical analysis is a manual

process, which can't easily be automated. Each data set is treated as a

separate special case, which is not feasible if there are thousands of data

sets.

But this problem is sometimes more apparent than real. It can be remarkably

effective to generate a large number of graphs and just browse them without

studying each one in great depth. It is entirely possible to scan a few

hundred graphs visually, and doing so may already lead to a high-level

hypothesis regarding the classification of the graphs into just a few

sub-groups, which can then be investigated in detail. (Thank goodness

gnuplot is scriptable, so that preparing a few hundred graphs poses no

problem.)
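
As a rough illustration of the scripting point above, the sketch below generates a gnuplot script that writes one PNG file per data file. It is written in Python purely for illustration. The file names, the two-column layout (`using 1:2`), and the helper name `batch_script` are assumptions, not something prescribed by the text.

```python
from pathlib import Path


def batch_script(data_files):
    """Return gnuplot commands that render each data file to its own PNG.

    Assumes every file has x values in column 1 and y values in column 2.
    """
    lines = ["set terminal png"]
    for name in data_files:
        png = Path(name).with_suffix(".png")
        lines.append(f'set output "{png}"')
        lines.append(f'plot "{name}" using 1:2 with lines title "{name}"')
    # Resetting the output closes the last image file.
    lines.append("set output")
    return "\n".join(lines) + "\n"


# Hypothetical inputs: run001.dat ... run300.dat
files = [f"run{i:03d}.dat" for i in range(1, 301)]
script = batch_script(files)
print(script.count("plot "), "plot commands generated")
```

Saving the generated text to a file (say, `batch.gp`) and running `gnuplot batch.gp` would then produce the images for browsing.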

Graphical analysis yields qualitative, not quantitative results.

Whether you regard this as a strength or a weakness depends on your

situation. If you are looking for new behavior, graphical analysis is your

friend. If you are trying to determine by what percentage a new fertilizer

treatment increases crop production, statistical analysis is the way to go.

It takes skill and experience. Graphical analysis is a creative

process, using inductive logic to move from observations to hypotheses.

There is no prescribed set of steps to move from a data set to conclusions

about the underlying phenomena and not much that can be taught in a

conventional classroom format.

But by the same token, it does not require formal training, either.

Ingenuity, intuition, and curiosity (!) are the most important character

traits. Anyone can play this game, if they are interested in finding out

what the data tries to tell them.

1.3. What is Gnuplot?

Gnuplot is a program to explore data graphically. Its purpose is to

generate plots and graphs from data or functions. It can produce highly

polished graphs, suitable for publication, and simple throw-away graphs,

when we are merely playing with an idea.

Gnuplot is command-line driven: you issue commands at a prompt, and gnuplot

will redraw the current plot in response. Gnuplot is also interactive: the

output is generated and displayed immediately in an output window. Although

gnuplot can be used as a background process in batch-mode, typical use is

highly interactive. On the other hand, its primary user interaction is

through a command language, not through a point-and-click GUI.

Don't let the notion of a command language throw you: gnuplot is easy to

use - really easy to use! It takes only one line to read and plot

a data file, and most of the command syntax is straightforward and quite

intuitive. Gnuplot does not require programming, or any deeper

understanding of its command syntax to get started.
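
To give a feeling for what "one line" means, the sketch below builds such a command and, if gnuplot happens to be installed, can pipe it to gnuplot on standard input. The Python wrapper, the file name `data.txt`, and the helper names `plot_command` and `run_gnuplot` are assumptions made for illustration. Only the `plot ... using ... with ...` command itself is gnuplot syntax.

```python
import shutil
import subprocess


def plot_command(path, x_col=1, y_col=2, style="lines"):
    """Build the single gnuplot command that reads and plots a data file."""
    return f'plot "{path}" using {x_col}:{y_col} with {style}'


def run_gnuplot(commands):
    """Send commands to gnuplot on standard input.

    Returns False if no gnuplot executable is found on the PATH.
    """
    exe = shutil.which("gnuplot")
    if exe is None:
        return False
    # -persist asks gnuplot to leave the plot window open after it exits.
    subprocess.run([exe, "-persist"], input=commands + "\n", text=True, check=True)
    return True


print(plot_command("data.txt"))
```

Calling `run_gnuplot(plot_command("data.txt"))` would display the graph, provided the data file exists. Typing the printed command directly at the gnuplot prompt does the same thing.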

So, this is the fundamental workflow of all work with gnuplot: plot,

examine, repeat - until you have found out whatever you wanted to learn

from the data. Gnuplot supports the iterative process model required for

exploratory work perfectly!

1.3.1. Gnuplot is not GNU

To dispel one common confusion right away: gnuplot is not GNU software, has

nothing to do with the GNU project, and is not released under the GNU

General Public License (GPL). Gnuplot is released under a permissive open-source

license.

Gnuplot has been around a long time - a very long time, in fact. It was

started by Thomas Williams and Colin Kelley in 1986. On the gnuplot FAQ,

Thomas has this to say about how gnuplot was started and how it got

its name:

I was taking a differential equation class and Colin was taking

Electromagnetics, we both thought it'd be helpful to visualize the

mathematics behind them. We were both working as sys admin for an EE VLSI

lab, so we had the graphics terminals and the time to do some coding. The

posting was better received than we expected, and prompted us to add some,

albeit lame, support for file data. Any reference to GNUplot is incorrect.

The real name of the program is "gnuplot". You see people use "Gnuplot"

quite a bit because many of us have an aversion to starting a sentence with

a lower case letter, even in the case of proper nouns and titles. gnuplot

is not related to the GNU project or the FSF in any but the most peripheral

sense. Our software was designed completely independently and the name

"gnuplot" was actually a compromise. I wanted to call it "llamaplot" and

Colin wanted to call it "nplot." We agreed that "newplot" was acceptable

but, we then discovered that there was an absolutely ghastly pascal program

of that name that the Computer Science Dept. occasionally used. I decided

that "gnuplot" would make a nice pun and after a fashion Colin agreed.

For a long time (about ten years), the stable major version of gnuplot was

version 3.7.x, until version 4.0.0 was released in 2004. As part of the 4.x

release, gnuplot has acquired a number of useful new features, including:

- palette-mapped color mode (pm3d), which makes it possible to choose

colors for plots from continuous, user-defined color gradients

("palettes").

- much better text-handling capabilities, including the ability to read

text from a file and use text as a plot style, support for common string

functions and "enhanced" text mode, allowing the use of formatting commands

and special characters in gnuplot graphs.

- new plot styles: filled curves and boxes, histograms, and vectors.

- improved output handling, including an entirely new interactive

terminal (wxt) based on the wxWidgets toolkit and using the Cairo and Pango graphics and

font libraries, resulting in a dramatically improved visual appearance over

previous interactive gnuplot terminals. There are also many marginal

improvements to other terminals, including a unified treatment of common

pixmap file formats (GIF, PNG, JPG) using libgd.

- ... and many more.

The current release of gnuplot is version 4.2.3 (released in March 2008).

Gnuplot continues to be actively developed - if you would like to

contribute, subscribe to the developers' mailing list